Mirror of https://github.com/mimblewimble/grin.git (synced 2025-05-02 07:11:15 +03:00)

Mining test debug output, fixes to diff. adjustment and start of POW documentation (#69)

* Beginning to add a POW description, with some minor changes to mining testing and additional debug information in the mining output.
* Many additions to create a first draft of the POW documentation.
* Fixes to difficulty adjustment by adding a MINIMUM_DIFFICULTY consensus value. Without it, the difficulty never adjusted above 1 due to integer flooring.
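
The flooring problem is easiest to see in a worked example. The sketch below covers only the final ratio step of the adjustment. It uses plain `u64` instead of the `BigUint`-backed `Difficulty` type and assumes `BLOCK_TIME_WINDOW` is 23 blocks at 60 seconds, i.e. 1380 seconds. `scaled_difficulty` is a hypothetical helper and does not come from the codebase.

```rust
/// Assumed target timespan: a 23-block window at a 60-second block time.
const BLOCK_TIME_WINDOW: u64 = 23 * 60;

/// Final ratio step of the adjustment, mirroring the diff's
/// `diff_avg * BLOCK_TIME_WINDOW / adj_ts` with integer (flooring) division.
fn scaled_difficulty(diff_avg: u64, adj_ts: u64) -> u64 {
    diff_avg * BLOCK_TIME_WINDOW / adj_ts
}
```

Start from an average difficulty of 1 and feed in a dampened timespan of 1150 s, meaning blocks arrive faster than the 1380 s target. The result still floors back to 1, so the difficulty can never climb. Starting from 10, the same timespan yields 12.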


Parent: 5f8a0d9f1c
Commit: 97b7421ce0
18 changed files with 295 additions and 37 deletions


@@ -60,6 +60,10 @@ pub const CUT_THROUGH_HORIZON: u32 = 48 * 3600 / (BLOCK_TIME_SEC as u32);
 /// peer-to-peer networking layer only for DoS protection.
 pub const MAX_MSG_LEN: u64 = 20_000_000;

+/// The minimum mining difficulty we'll allow

+pub const MINIMUM_DIFFICULTY: u32 = 10;

 pub const MEDIAN_TIME_WINDOW: u32 = 11;

 pub const DIFFICULTY_ADJUST_WINDOW: u32 = 23;

@@ -126,7 +130,7 @@ pub fn next_difficulty<T>(cursor: T) -> Result<Difficulty, TargetError>

 // Check we have enough blocks
 if window_end.len() < (MEDIAN_TIME_WINDOW as usize) {
-return Ok(Difficulty::one());
+return Ok(Difficulty::from_num(MINIMUM_DIFFICULTY));
 }

 // Calculating time medians at the beginning and end of the window.

@@ -136,7 +140,7 @@ pub fn next_difficulty<T>(cursor: T) -> Result<Difficulty, TargetError>
 let end_ts = window_end[window_end.len() / 2];

 // Average difficulty and dampened average time
-let diff_avg = diff_sum / Difficulty::from_num(DIFFICULTY_ADJUST_WINDOW);
+let diff_avg = diff_sum.clone() / Difficulty::from_num(DIFFICULTY_ADJUST_WINDOW);
 let ts_damp = (3 * BLOCK_TIME_WINDOW + (begin_ts - end_ts)) / 4;

 // Apply time bounds

@@ -148,7 +152,6 @@ pub fn next_difficulty<T>(cursor: T) -> Result<Difficulty, TargetError>
 ts_damp
 };

-// Final ratio calculation
 Ok(diff_avg * Difficulty::from_num(BLOCK_TIME_WINDOW as u32) /
 Difficulty::from_num(adj_ts as u32))
 }

@@ -185,13 +188,16 @@ mod test {
 #[test]
 fn next_target_adjustment() {
 // not enough data
-assert_eq!(next_difficulty(vec![]).unwrap(), Difficulty::one());
+assert_eq!(next_difficulty(vec![]).unwrap(), Difficulty::from_num(MINIMUM_DIFFICULTY));

 assert_eq!(next_difficulty(vec![Ok((60, Difficulty::one()))]).unwrap(),
-Difficulty::one());
+Difficulty::from_num(MINIMUM_DIFFICULTY));

 assert_eq!(next_difficulty(repeat(60, 10, DIFFICULTY_ADJUST_WINDOW)).unwrap(),
-Difficulty::one());
+Difficulty::from_num(MINIMUM_DIFFICULTY));

 // just enough data, right interval, should stay constant

 let just_enough = DIFFICULTY_ADJUST_WINDOW + MEDIAN_TIME_WINDOW;
 assert_eq!(next_difficulty(repeat(60, 1000, just_enough)).unwrap(),
 Difficulty::from_num(1000));


@@ -23,6 +23,7 @@ use core::Committed;
 use core::{Input, Output, Proof, TxKernel, Transaction, COINBASE_KERNEL, COINBASE_OUTPUT};
 use core::transaction::merkle_inputs_outputs;
 use consensus::REWARD;
+use consensus::MINIMUM_DIFFICULTY;
 use core::hash::{Hash, Hashed, ZERO_HASH};
 use core::target::Difficulty;
 use ser::{self, Readable, Reader, Writeable, Writer};

@@ -65,8 +66,8 @@ impl Default for BlockHeader {
 height: 0,
 previous: ZERO_HASH,
 timestamp: time::at_utc(time::Timespec { sec: 0, nsec: 0 }),
-difficulty: Difficulty::one(),
-total_difficulty: Difficulty::one(),
+difficulty: Difficulty::from_num(MINIMUM_DIFFICULTY),
+total_difficulty: Difficulty::from_num(MINIMUM_DIFFICULTY),
 utxo_merkle: ZERO_HASH,
 tx_merkle: ZERO_HASH,
 features: DEFAULT_BLOCK,


@@ -17,7 +17,7 @@
 //! the related difficulty, defined as the maximum target divided by the hash.

 use std::fmt;
-use std::ops::{Add, Mul, Div};
+use std::ops::{Add, Mul, Div, Sub};

 use bigint::BigUint;
 use serde::{Serialize, Serializer, Deserialize, Deserializer, de};

@@ -86,6 +86,13 @@ impl Add<Difficulty> for Difficulty {
 }
 }

+impl Sub<Difficulty> for Difficulty {
+type Output = Difficulty;
+fn sub(self, other: Difficulty) -> Difficulty {
+Difficulty { num: self.num - other.num }
+}
+}

 impl Mul<Difficulty> for Difficulty {
 type Output = Difficulty;
 fn mul(self, other: Difficulty) -> Difficulty {


@@ -18,6 +18,7 @@ use time;

 use core;
 use consensus::DEFAULT_SIZESHIFT;
+use consensus::MINIMUM_DIFFICULTY;
 use core::hash::Hashed;
 use core::target::Difficulty;


@@ -34,8 +35,8 @@ pub fn genesis() -> core::Block {
 tm_mday: 4,
 ..time::empty_tm()
 },
-difficulty: Difficulty::one(),
-total_difficulty: Difficulty::one(),
+difficulty: Difficulty::from_num(MINIMUM_DIFFICULTY),
+total_difficulty: Difficulty::from_num(MINIMUM_DIFFICULTY),
 utxo_merkle: [].hash(),
 tx_merkle: [].hash(),
 features: core::DEFAULT_BLOCK,


@@ -28,6 +28,7 @@ pub mod cuckoo;
 use time;

 use consensus::EASINESS;
+use consensus::MINIMUM_DIFFICULTY;
 use core::BlockHeader;
 use core::hash::Hashed;
 use core::target::Difficulty;

@@ -92,9 +93,9 @@ mod test {
 fn genesis_pow() {
 let mut b = genesis::genesis();
 b.header.nonce = 310;
-pow_size(&mut b.header, Difficulty::one(), 12).unwrap();
+pow_size(&mut b.header, Difficulty::from_num(MINIMUM_DIFFICULTY), 12).unwrap();
 assert!(b.header.nonce != 310);
-assert!(b.header.pow.to_difficulty() >= Difficulty::one());
+assert!(b.header.pow.to_difficulty() >= Difficulty::from_num(MINIMUM_DIFFICULTY));
 assert!(verify_size(&b.header, 12));
 }
 }


BIN doc/pow/images/cuckoo_base.png (binary file not shown, 29 KiB)
BIN doc/pow/images/cuckoo_base_numbered.png (binary file not shown, 29 KiB)
BIN doc/pow/images/cuckoo_base_numbered_few_edges.png (binary file not shown, 13 KiB)
BIN doc/pow/images/cuckoo_base_numbered_few_edges_cycle.png (binary file not shown, 13 KiB)
BIN doc/pow/images/cuckoo_base_numbered_many.png (binary file not shown, 35 KiB)
BIN doc/pow/images/cuckoo_base_numbered_many_edges.png (binary file not shown, 15 KiB)
BIN doc/pow/images/cuckoo_base_numbered_minimal.png (binary file not shown, 12 KiB)


doc/pow/pow.md (new file, 212 lines)


Grin's Proof-of-Work
====================

[WIP and subject to review, may still contain errors]

This document is meant to outline, at a level suitable for someone without prior knowledge,
the algorithms and processes currently involved in Grin's Proof-of-Work system. We'll start
with a general overview of cycles in a graph and the Cuckoo Cycle algorithm, which forms the
basis of Grin's proof-of-work. We'll then move on to Grin-specific details, which will outline
the other systems that combine with Cuckoo Cycles to form the entirety of mining in Grin.

Please note that Grin is currently under active development, and any and all of this is subject to
change (and will change) before a general release.


# Graphs and Cuckoo Cycles

Grin's basic Proof-of-Work algorithm is called Cuckoo Cycle, which is specifically designed
to be resistant to Bitcoin-style hardware arms races. It is primarily a memory-bound algorithm,
which (at least in theory) means that solution time is limited by the speed of a system's RAM
rather than by processor or GPU speed. As such, mining Cuckoo Cycle solutions should be viable on
most commodity hardware, and should require far less energy than most other GPU-, CPU- or ASIC-bound
proof-of-work algorithms.

The Cuckoo Cycle POW is the work of John Tromp, and the most up-to-date documentation and implementations
can be found in [his github repository](https://github.com/tromp/cuckoo). The
[white paper](https://github.com/tromp/cuckoo/blob/master/doc/cuckoo.pdf) is the best source of
further technical details.

|

## Cycles in a Graph

|

||||||

|

|

||||||

|

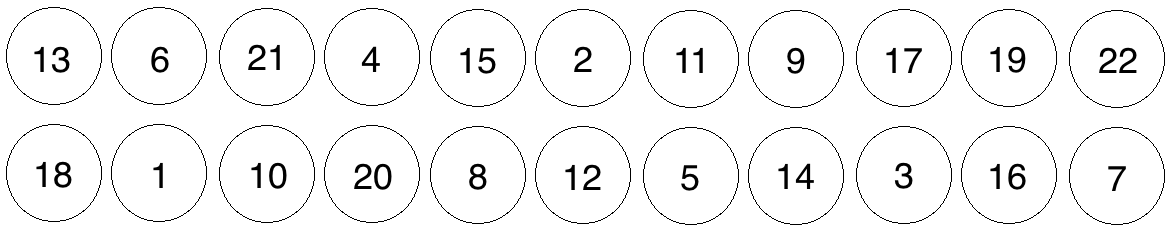

Cuckoo Cycles is an algorithm meant to detect cycles in a random bipartite graphs graph of N nodes and M edges.

|

||||||

|

In plainer terms, a Node is simply an element storing a value, an Edge is a line connecting two nodes,

|

||||||

|

and a graph is bipartite when it's split into two groupings. The simple

|

||||||

|

graph below, with values placed at random, denotes just such a graph, with 8 Nodes storing 8 values

|

||||||

|

divided into 2 groups (one row on top and one row on the bottom,) and zero Edges (i.e. no lines

|

||||||

|

connecting any nodes.)

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

*A graph of 8 Nodes with Zero Edges*

|

||||||

|

|

||||||

|

Let's throw a few Edges into the graph now, randomly:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

*8 Nodes with 4 Edges*

|

||||||

|

|

||||||

|

We now have a randomly-generated graph with 8 nodes (N) and 4 edges (M), or an NxM graph where

|

||||||

|

N=8 and M=4. Our basic Proof-of-Work is now concerned with finding 'cycles' of a certain length

|

||||||

|

within this random graph, or, put simply, a path of connected nodes. So, if we were looking

|

||||||

|

for a cycle of length 3 (a path connecting 3 nodes), one can be detected in this graph,

|

||||||

|

i.e. the path running from 5 to 6 to 3:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

*Cycle found*

|

||||||

|

|

||||||

|

Adjusting the number of Edges M relative to the number of Nodes N changes the difficulty of the

|

||||||

|

cycle-finding problem, and the probability that a cycle exists in the current graph. For instance,

|

||||||

|

if our POW problem were to find a cycle of length 5 in the graph, the current difficulty of 5/8 (M/N)

|

||||||

|

would mean that all 4 edges would need to be randomly generated in a perfect cycle in order for

|

||||||

|

there to be a solution. If you increase the number of edges relative to the number of nodes,

|

||||||

|

you increase the probability that a solution exists:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

*MxN = 9x8 - Cycle of length 5 found*

|

||||||

|

|

||||||

|

So modifying the ratio M/N changes the number of expected occurrences of a cycle within a randomly

|

||||||

|

generated graph.

|

||||||

|

|

||||||

|

For a small graph such as the one above, determining whether a cycle of a certain length exists is trivial.

|

||||||

|

But as the graphs get larger, detecting such cycles becomes more difficult. For instance, does this

|

||||||

|

graph have a cycle of length 7, i.e. 7 directly connected nodes?

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

*Meat-space Cycle Detection exercise*

|

||||||

|

|

||||||

|

The answer is left as an exercise to the reader, but the overall takeaway is that detecting such cycles becomes

|

||||||

|

a more difficult exercise as the size of a graph grows. It also becomes easier as M/N becomes larger, i.e. you add more edges relative to the number of nodes in a graph.
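
For graphs too large to check by eye, union-find gives a simple way to decide whether *any* cycle exists. Add the edges one at a time, and report a cycle as soon as an edge joins two nodes that are already connected. The Rust sketch below is for illustration only. It is not Tromp's Cuckoo Cycle solver, and it detects whether some cycle exists rather than a cycle of a specific length.

```rust
/// Minimal union-find (disjoint set) over nodes 0..n.
struct UnionFind {
    parent: Vec<usize>,
}

impl UnionFind {
    fn new(n: usize) -> Self {
        UnionFind { parent: (0..n).collect() }
    }

    /// Follow parent links to the representative of `x`'s set,
    /// halving the path as we go.
    fn find(&mut self, mut x: usize) -> usize {
        while self.parent[x] != x {
            self.parent[x] = self.parent[self.parent[x]];
            x = self.parent[x];
        }
        x
    }

    /// Merge the sets of `a` and `b`; returns false if they were already joined.
    fn union(&mut self, a: usize, b: usize) -> bool {
        let (ra, rb) = (self.find(a), self.find(b));
        if ra == rb {
            return false;
        }
        self.parent[ra] = rb;
        true
    }
}

/// Returns true if the undirected graph with `n` nodes and the given edges
/// contains at least one cycle.
fn has_cycle(n: usize, edges: &[(usize, usize)]) -> bool {
    let mut uf = UnionFind::new(n);
    // An edge whose endpoints are already connected closes a cycle.
    edges.iter().any(|&(a, b)| !uf.union(a, b))
}
```

In the test values, nodes 0-3 play the role of the top row and 4-7 the bottom row. A path 0-4-1-5 contains no cycle; adding the edge 5-0 closes one.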


## Cuckoo Cycles

The Cuckoo Cycle algorithm is a specialised algorithm designed to solve exactly this problem, and it does
so by inserting values into a structure called a 'Cuckoo Hashtable' according to a hash which maps nodes
into possible locations in two separate arrays. This document won't go into detail on the base algorithm, as
it's outlined in plain enough detail in section 5 of the
[white paper](https://github.com/tromp/cuckoo/blob/master/doc/cuckoo.pdf). There are also several
variants on the algorithm that make various speed/memory tradeoffs, again beyond the scope of this document.
However, there are a few details following from the above that we need to keep in mind before going on to more
technical details of Grin's proof-of-work.

* The 'random' graphs, as detailed above, are not actually random. They are generated by putting each edge index (nonce)
  through a seeded hashing function, SIPHASH, which generates the edge's two endpoints (one in each array).
  The seed will come from a hash of a block header, outlined further below.
* The 'Proof' created by this algorithm is a set of nonces that generate the cycle, which can be trivially validated by other nodes.
* Two main parameters, as explained above, are passed into the Cuckoo Cycle algorithm that affect the probability of a solution, and the
  time it takes to search the graph for a solution:
    * The M/N ratio outlined above, which controls the number of edges relative to the size of the graph
    * The size of the graph itself
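
The first and third points can be sketched together. A seed, standing in for the block header hash, deterministically produces an edge list, and each edge nonce maps to one node in each half of the graph. This sketch makes a substitution: Cuckoo Cycle uses SipHash-2-4 keyed by the header hash. Here, (seed, nonce, side) is hashed with Rust's standard `DefaultHasher`, whose algorithm is unspecified, purely to show the shape of the mapping. Treating easiness as "edges as a percentage of nodes" follows this document's description. `endpoint` and `gen_edges` are hypothetical names.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Hash (seed, edge nonce, side) into a node index in 0..half.
/// Stand-in for keyed SipHash; NOT the real Cuckoo Cycle edge function.
fn endpoint(seed: &[u8], nonce: u64, side: u64, half: u64) -> u64 {
    let mut h = DefaultHasher::new();
    seed.hash(&mut h);
    nonce.hash(&mut h);
    side.hash(&mut h);
    h.finish() % half
}

/// Generate the edge list for a graph of 2^sizeshift nodes split into two
/// halves, with `easiness` percent as many edges as nodes (assumed mapping).
fn gen_edges(seed: &[u8], sizeshift: u32, easiness: u64) -> Vec<(u64, u64)> {
    let size = 1u64 << sizeshift;
    let half = size / 2;
    let num_edges = easiness * size / 100;
    (0..num_edges)
        .map(|n| {
            let u = endpoint(seed, n, 0, half); // node in the first half
            let v = half + endpoint(seed, n, 1, half); // node in the second half
            (u, v)
        })
        .collect()
}
```

`gen_edges(b"header-hash", 12, 50)` returns 2048 edges, and the same seed always yields the same graph. That determinism lets other nodes cheaply recompute the edges named by the nonces in a proof.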


How these parameters interact in practice is looked at in more [detail below](#mining-loop-difficulty-control-and-timing).

Now, (hopefully) armed with a basic understanding of what the Cuckoo Cycle algorithm is intended to do, as well as the parameters that affect how difficult it is to find a solution, we move on to the other portions of Grin's POW system.

# Mining in Grin

The Cuckoo Cycle outlined above forms the basis of Grin's mining process; however, Grin uses Cuckoo Cycles in tandem with several other systems to create a Proof-of-Work.

### Additional Difficulty Control

In order to provide additional difficulty control in a manner that meets the needs of a network with constantly evolving hashpower
availability, a further Hashcash-based difficulty check is applied to potential solution sets as follows:

If the SHA256 hash
of a potential set of solution nonces (currently an array of 42 u32s representing the cycle nonces)
is less than an evolving difficulty target T, then the solution is considered valid. More precisely,
the proof difficulty is calculated as the maximum target hash (2^256) divided by the current hash,
rounded down to an integer. If this integer is greater than or equal to the evolving network difficulty, the POW
is considered valid and the block is submitted to the chain for validation.
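
The check just described can be sketched as follows. The sketch assumes the 32-byte SHA256 output is already available, since computing it would need an external crate such as `sha2`. It approximates the ratio 2^256 / hash using only the hash's top 64 bits, i.e. 2^64 / top. Grin's `Difficulty` type performs the full-width division with a `BigUint`, so the shortcut below is a simplification for illustration. `hash_difficulty` and `meets_target` are hypothetical names.

```rust
/// Approximates the proof difficulty 2^256 / H for a 32-byte big-endian hash H,
/// using only its top 64 bits: roughly 2^64 / top. Simplified; not Grin's code.
fn hash_difficulty(hash: &[u8; 32]) -> u64 {
    let mut top_bytes = [0u8; 8];
    top_bytes.copy_from_slice(&hash[..8]);
    let top = u64::from_be_bytes(top_bytes);
    if top == 0 {
        // Hash below 2^192: the difficulty exceeds what a u64 can hold here.
        return u64::MAX;
    }
    // Integer division rounds the result down.
    let d = (1u128 << 64) / u128::from(top);
    d.min(u128::from(u64::MAX)) as u64
}

/// A proof passes the extra check if its difficulty meets the target.
fn meets_target(hash: &[u8; 32], target: u64) -> bool {
    hash_difficulty(hash) >= target
}
```

A hash whose leading byte is `0x01`, with all other bytes zero, gives a difficulty of 256 and passes a target of 10. An all-`0xff` hash gives 1 and fails.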


In other words, a potential proof, as well as containing a valid Cuckoo Cycle, also needs its hash to yield a difficulty at least as high as the target difficulty. This difficulty is derived from:

### Evolving Network Difficulty

The difficulty target is intended to evolve according to the available network hashpower, with the goal of
keeping the average block solution time within range of a target (currently 60 seconds, though this is subject
to change).

The difficulty calculation is based on both the Digishield and GravityWave families of difficulty computation,
coming to something very close to Zcash's. The reference difficulty is an average of the difficulty over a window of
23 blocks (the current consensus value). The corresponding timespan is calculated by using the difference between
the median timestamps at the beginning and the end of the window (each median taken over an 11-block MEDIAN_TIME_WINDOW).
If the timespan is higher or lower than a certain range (adjusted with a dampening factor to allow for normal variation),
then the difficulty is raised or lowered to a value aiming for the target block solve time.
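
The adjustment can be sketched in Rust as follows. This is a simplified, self-contained sketch rather than Grin's `next_difficulty`. It uses `u64` instead of `Difficulty` and takes a slice of `(timestamp, difficulty)` pairs ordered newest first. The `LOWER_TIME_BOUND` / `UPPER_TIME_BOUND` values are hypothetical, because the actual bounds are not shown here. The window sizes, the 3:1 dampening and the `MINIMUM_DIFFICULTY` fallback follow the constants and formulas in the diff. The final clamp to `MINIMUM_DIFFICULTY` is an extra assumption of this sketch.

```rust
/// Constants from the diff; BLOCK_TIME_SEC = 60 is the target stated in the text.
const BLOCK_TIME_SEC: u64 = 60;
const MEDIAN_TIME_WINDOW: usize = 11;
const DIFFICULTY_ADJUST_WINDOW: usize = 23;
const MINIMUM_DIFFICULTY: u64 = 10;
/// Target timespan of the adjustment window (assumed: window * block time).
const BLOCK_TIME_WINDOW: u64 = DIFFICULTY_ADJUST_WINDOW as u64 * BLOCK_TIME_SEC;
/// Hypothetical clamp on the dampened timespan (not taken from Grin).
const LOWER_TIME_BOUND: u64 = BLOCK_TIME_WINDOW / 2;
const UPPER_TIME_BOUND: u64 = BLOCK_TIME_WINDOW * 2;

/// Median of a small slice of timestamps.
fn median(ts: &[u64]) -> u64 {
    let mut sorted = ts.to_vec();
    sorted.sort_unstable();
    sorted[sorted.len() / 2]
}

/// `blocks` holds (timestamp, difficulty) pairs, newest block first.
fn next_difficulty(blocks: &[(u64, u64)]) -> u64 {
    let needed = DIFFICULTY_ADJUST_WINDOW + MEDIAN_TIME_WINDOW;
    if blocks.len() < needed {
        // Not enough history yet: fall back to the floor value.
        return MINIMUM_DIFFICULTY;
    }
    let ts: Vec<u64> = blocks[..needed].iter().map(|&(t, _)| t).collect();
    // Medians of the newest and the oldest MEDIAN_TIME_WINDOW timestamps.
    let end_ts = median(&ts[..MEDIAN_TIME_WINDOW]);
    let begin_ts = median(&ts[DIFFICULTY_ADJUST_WINDOW..]);
    // Average difficulty over the adjustment window.
    let diff_sum: u64 = blocks[..DIFFICULTY_ADJUST_WINDOW].iter().map(|&(_, d)| d).sum();
    let diff_avg = diff_sum / DIFFICULTY_ADJUST_WINDOW as u64;
    // Dampen: three parts target timespan, one part observed timespan.
    let observed = end_ts.saturating_sub(begin_ts);
    let ts_damp = (3 * BLOCK_TIME_WINDOW + observed) / 4;
    let adj_ts = ts_damp.clamp(LOWER_TIME_BOUND, UPPER_TIME_BOUND);
    // Scale the average by target / actual time, never dropping below the floor.
    (diff_avg * BLOCK_TIME_WINDOW / adj_ts).max(MINIMUM_DIFFICULTY)
}
```

Take 34 blocks spaced exactly 60 s apart at difficulty 1000: the result stays at 1000. With blocks 30 s apart, the dampened timespan is 1207 s and the difficulty rises only to 1143. A raw 2x speed-up would suggest doubling, so the smaller step is the dampening at work.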


### The Mining Loop

All of these systems are put together in the mining loop, which attempts to create
valid Proofs-of-Work for the latest block in the chain. The following is an outline of what the main mining loop does during a single iteration:

* Get the latest chain state and build a block on top of it, which includes:
    * A Block Header with new values particular to this mining attempt, which are:
        * The latest target difficulty as selected by the [evolving network difficulty](#evolving-network-difficulty) algorithm
        * A set of transactions available for validation selected from the transaction pool
        * A coinbase transaction (which we're hoping to give to ourselves)
        * The current timestamp
        * A randomly generated nonce to add further randomness to the header's hash
        * The merkle root of the UTXO set and fees (not yet implemented)
* Then, a sub-loop runs for a set amount of time, currently configured at 2 seconds, where the following happens:
    * The new block header is hashed to create a hash value
    * The cuckoo graph generator is initialised, which accepts as parameters:
        * The hash of the potential block header, which is to be used as the key to a SIPHASH function
          that will generate the pair of locations (the two endpoints) for each edge in the graph.
        * The size of the graph (a consensus value).
        * An easiness value (a consensus value) representing the M/N ratio described above, which determines the probability
          of a solution appearing in the graph
    * The Cuckoo Cycle detection algorithm tries to find a solution (i.e. a cycle of length 42) within the generated
      graph.
    * If a cycle is found, a SHA256 hash of the proof is created and is compared to the current target
      difficulty, as outlined in [Additional Difficulty Control](#additional-difficulty-control) above.
    * If the SHA256 Hash difficulty is greater than or equal to the target difficulty, the block is sent to the
      transaction pool, propagated amongst peers for validation, and work begins on the next block.
    * If the SHA256 Hash difficulty is less than the target difficulty, the proof is thrown out and the timed loop continues.
    * If no solution is found, increment the nonce in the header by 1, and update the header's timestamp so the next iteration
      hashes a different value for seeding the next loop's graph generation step.
    * If the loop times out with no solution found, start over again from the top, collecting new transactions and creating
      a new block altogether.
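
The timed sub-loop can be sketched as below. Everything here is hypothetical scaffolding rather than Grin's miner API. `Header` is a stripped-down header, and `try_solve` stands in for hashing the header, generating the graph and running cycle detection. It returns the proof's difficulty when a cycle is found. Timestamp updates and block submission are omitted.

```rust
use std::time::{Duration, Instant};

/// Hypothetical, minimal stand-in for a block header.
#[derive(Clone, Debug)]
struct Header {
    nonce: u64,
    difficulty: u64,
}

/// Sketch of the timed sub-loop. `try_solve` stands in for graph generation
/// plus cycle search and returns Some(proof_difficulty) when a cycle is found.
fn mine_until<F>(mut header: Header, budget: Duration, mut try_solve: F) -> Option<Header>
where
    F: FnMut(&Header) -> Option<u64>,
{
    let deadline = Instant::now() + budget;
    while Instant::now() < deadline {
        if let Some(proof_diff) = try_solve(&header) {
            if proof_diff >= header.difficulty {
                return Some(header); // valid proof: hand the block off
            }
            // Cycle found but its difficulty is too low: discard it.
        }
        // New nonce -> new header hash -> new graph on the next attempt.
        header.nonce += 1;
    }
    None // Timed out: the caller rebuilds the block with fresh transactions.
}
```

Passing the solver in as a closure keeps the control flow testable. For example, a fake solver can "find" a cycle on every fifth nonce, with difficulty equal to the nonce. Against a target of 10 it succeeds at nonce 10, whereas a solver that never finds a cycle simply times out.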


### Mining Loop Difficulty Control and Timing

Controlling the overall difficulty of the mining loop requires finding a balance between the three values outlined above:

* Graph size (currently represented as a bit-shift value n representing a size of 2^n nodes, consensus value
  DEFAULT_SIZESHIFT). Smaller graphs can be exhaustively searched more quickly, but will also have fewer
  solutions for a given easiness value. A very small graph needs a higher easiness value to have the same
  chance of containing a solution as a larger graph with a lower easiness value.
* The 'Easiness' consensus value, or the M/N ratio of the graph expressed as a percentage. The higher this value, the more likely
  it is that a generated graph will contain a solution. In tandem with the above, the larger the graph, the more solutions
  it will contain for a given easiness value.
* The evolving network difficulty target.

These values need to be carefully tweaked in order for the mining algorithm to find the right balance between the
cuckoo graph size and the evolving difficulty. The POW needs to remain mostly Cuckoo Cycle based, but still allow for
reasonably short block times that allow new transactions to be quickly processed.

If the graph size is too low and the easiness too high, for instance, then many cuckoo cycle solutions can easily be
found for a given block, and the POW will start to favour those who can hash faster, precisely what Cuckoo Cycle is
trying to avoid. If the graph is too large and easiness too low, however, then it can potentially take any solver a
long time to find a solution in a single graph, well outside a window in which you'd like to stop to collect new
transactions.

These values are currently set to 2^12 for the graph size and 50% for the easiness value; however, they are only
temporary values for testing. The current miner implementation is very unoptimised, and the graph size will need
to be changed as faster and more optimised Cuckoo Cycle algorithms are put in place.
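
The helper below makes these numbers concrete by computing the node and edge counts for a given size shift and easiness. It assumes, as described above, that the graph has 2^n nodes and that easiness is the percentage of edges relative to nodes. `graph_params` is a hypothetical illustration and does not exist in the codebase.

```rust
/// Node and edge counts implied by a size shift and an easiness percentage.
#[derive(Debug, PartialEq)]
struct GraphParams {
    nodes: u64,
    edges: u64,
}

/// Assumes N = 2^sizeshift nodes and M = easiness% of N edges.
fn graph_params(sizeshift: u32, easiness_pct: u64) -> GraphParams {
    let nodes = 1u64 << sizeshift;
    let edges = nodes * easiness_pct / 100;
    GraphParams { nodes, edges }
}
```

For the current test values, `graph_params(12, 50)` gives 4096 nodes and 2048 edges. Each increment of the size shift doubles both counts at the same easiness.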


### Pooling Capability

[More detail needed here] Note that contrary to some concerns about the ability to effectively pool Cuckoo Cycle mining, pooling Grin's POW
as outlined above is relatively straightforward. Members of the pool are able to prove they're working on a solution
by submitting valid Cuckoo Cycle proofs whose hash-derived difficulty falls below the current network target difficulty.
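
One way a pool could apply this is sketched below. The pool picks its own share difficulty, set below the network difficulty. Proofs that meet only the share difficulty are credited as evidence of work, and proofs that meet the network difficulty are submitted as blocks. The names `ShareResult` and `classify` are hypothetical, as is the share-difficulty mechanism itself; this document does not yet specify Grin's pooling protocol.

```rust
/// Outcome of checking a submitted proof against pool and network targets.
#[derive(Debug, PartialEq)]
enum ShareResult {
    Block,  // meets the network difficulty: a full block solution
    Share,  // meets only the pool's lower share difficulty: proof of work done
    Reject, // below the share difficulty
}

/// Classify a proof by its hash-derived difficulty.
fn classify(proof_diff: u64, share_diff: u64, network_diff: u64) -> ShareResult {
    if proof_diff >= network_diff {
        ShareResult::Block
    } else if proof_diff >= share_diff {
        ShareResult::Share
    } else {
        ShareResult::Reject
    }
}
```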


@@ -24,7 +24,9 @@ use time;
 use adapters::{ChainToPoolAndNetAdapter, PoolToChainAdapter};
 use api;
 use core::consensus;
+use core::consensus::*;
 use core::core;
+use core::core::target::*;
 use core::core::hash::{Hash, Hashed};
 use core::pow::cuckoo;
 use core::ser;

@@ -45,6 +47,10 @@ pub struct Miner {
 /// chain adapter to net
 chain_adapter: Arc<ChainToPoolAndNetAdapter>,
 tx_pool: Arc<RwLock<pool::TransactionPool<PoolToChainAdapter>>>,

+//Just to hold the port we're on, so this miner can be identified
+//while watching debug output
+debug_output_id: String,
 }

 impl Miner {

@@ -62,13 +68,23 @@ impl Miner {
 chain_store: chain_store,
 chain_adapter: chain_adapter,
 tx_pool: tx_pool,
+debug_output_id: String::from("none"),
 }
 }

+/// Keeping this optional so setting in a separate function
+/// instead of in the new function

+pub fn set_debug_output_id(&mut self, debug_output_id: String){
+self.debug_output_id=debug_output_id;
+}


 /// Starts the mining loop, building a new block on top of the existing
 /// chain anytime required and looking for PoW solution.
 pub fn run_loop(&self) {
-info!("Starting miner loop.");
+info!("(Server ID: {}) Starting miner loop.", self.debug_output_id);
 let mut coinbase = self.get_coinbase();
 loop {
 // get the latest chain state and build a block on top of it

@@ -84,21 +100,29 @@ impl Miner {
 // transactions) and as long as the head hasn't changed
 let deadline = time::get_time().sec + 2;
 let mut sol = None;
-debug!("Mining at Cuckoo{} for at most 2 secs on block {} at difficulty {}.",
+debug!("(Server ID: {}) Mining at Cuckoo{} for at most 2 secs on block {} at difficulty {}.",
+self.debug_output_id,
 self.config.cuckoo_size,
 latest_hash,
 b.header.difficulty);
 let mut iter_count = 0;
 if self.config.slow_down_in_millis > 0 {
-debug!("Artifically slowing down loop by {}ms per iteration.",
+debug!("(Server ID: {}) Artificially slowing down loop by {}ms per iteration.",
+self.debug_output_id,
 self.config.slow_down_in_millis);
 }
 while head.hash() == latest_hash && time::get_time().sec < deadline {
 let pow_hash = b.hash();
 let mut miner =
 cuckoo::Miner::new(&pow_hash[..], consensus::EASINESS, self.config.cuckoo_size);
 if let Ok(proof) = miner.mine() {
-if proof.to_difficulty() >= b.header.difficulty {
+let proof_diff=proof.to_difficulty();
+debug!("(Server ID: {}) Header difficulty is: {}, Proof difficulty is: {}",
+self.debug_output_id,
+b.header.difficulty,
+proof_diff);

+if proof_diff >= b.header.difficulty {
 sol = Some(proof);
 break;
 }

@ -109,16 +133,17 @@ impl Miner {

|

||||||

}

|

}

|

||||||

iter_count += 1;

|

iter_count += 1;

|

||||||

|

|

||||||

//Artifical slow down

|

//Artificial slow down

|

||||||

if self.config.slow_down_in_millis > 0 {

|

if self.config.slow_down_in_millis > 0 {

|

||||||

thread::sleep(std::time::Duration::from_millis(2000));

|

thread::sleep(std::time::Duration::from_millis(self.config.slow_down_in_millis));

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

// if we found a solution, push our block out

|

// if we found a solution, push our block out

|

||||||

if let Some(proof) = sol {

|

if let Some(proof) = sol {

|

||||||

info!("Found valid proof of work, adding block {}.", b.hash());

|

info!("(Server ID: {}) Found valid proof of work, adding block {}.",

|

||||||

b.header.pow = proof;

|

self.debug_output_id, b.hash());

|

||||||

|

b.header.pow = proof;

|

||||||

let opts = if self.config.cuckoo_size < consensus::DEFAULT_SIZESHIFT as u32 {

|

let opts = if self.config.cuckoo_size < consensus::DEFAULT_SIZESHIFT as u32 {

|

||||||

chain::EASY_POW

|

chain::EASY_POW

|

||||||

} else {

|

} else {

|
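This fix makes the artificial slowdown honour `slow_down_in_millis` instead of always sleeping for a hardcoded 2000 ms. The sketch below assumes a hypothetical `MinerConfig` that carries only this field; the real struct has more. The `slowdown` helper is also hypothetical. It returns the `Duration` so the behaviour can be checked without actually sleeping.

```rust
use std::thread;
use std::time::Duration;

/// Hypothetical, trimmed-down config: only the field relevant here.
struct MinerConfig {
    slow_down_in_millis: u64,
}

/// Per-iteration pause derived from the config, or `None` when the
/// slowdown is disabled (0, the default in `MinerConfig::default()`).
fn slowdown(config: &MinerConfig) -> Option<Duration> {
    if config.slow_down_in_millis > 0 {
        Some(Duration::from_millis(config.slow_down_in_millis))
    } else {
        None
    }
}

fn main() {
    let config = MinerConfig { slow_down_in_millis: 4 };
    if let Some(pause) = slowdown(&config) {
        // Sleep for the configured time rather than a fixed 2000 ms.
        thread::sleep(pause);
    }
}
```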

@@ -129,7 +154,8 @@ impl Miner {
 self.chain_adapter.clone(),
 opts);
 if let Err(e) = res {
-error!("Error validating mined block: {:?}", e);
+error!("(Server ID: {}) Error validating mined block: {:?}",
+self.debug_output_id, e);
 } else if let Ok(Some(tip)) = res {
 let chain_head = self.chain_head.clone();
 let mut head = chain_head.lock().unwrap();

@@ -137,8 +163,9 @@ impl Miner {
 *head = tip;
 }
 } else {
-debug!("No solution found after {} iterations, continuing...",
-iter_count)
+debug!("(Server ID: {}) No solution found after {} iterations, continuing...",
+self.debug_output_id,
+iter_count)
 }
 }
 }

@@ -162,9 +189,11 @@ impl Miner {
 let txs = txs_box.iter().map(|tx| tx.as_ref()).collect();
 let (output, kernel) = coinbase;
 let mut b = core::Block::with_reward(head, txs, output, kernel).unwrap();
-debug!("Built new block with {} inputs and {} outputs",
+debug!("(Server ID: {}) Built new block with {} inputs and {} outputs, difficulty: {}",
+self.debug_output_id,
 b.inputs.len(),
-b.outputs.len());
+b.outputs.len(),
+difficulty);
 
 // making sure we're not spending time mining a useless block
 let secp = secp::Secp256k1::with_caps(secp::ContextFlag::Commit);

@@ -189,7 +218,8 @@ impl Miner {
 let request = WalletReceiveRequest::Coinbase(CbAmount{amount: consensus::REWARD});
 let res: CbData = api::client::post(url.as_str(),
 &request)
-.expect("Wallet receiver unreachable, could not claim reward. Is it running?");
+.expect(format!("(Server ID: {}) Wallet receiver unreachable, could not claim reward. Is it running?",
+self.debug_output_id.as_str()).as_str());
 let out_bin = util::from_hex(res.output).unwrap();
 let kern_bin = util::from_hex(res.kernel).unwrap();
 let output = ser::deserialize(&mut &out_bin[..]).unwrap();
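`Result::expect` takes a `&str`, so the new code builds the message with `format!(...)` and passes `.as_str()`. That `String` is therefore allocated on every call, even when the wallet request succeeds; Clippy's `expect_fun_call` lint flags this pattern. A lazy alternative is `unwrap_or_else` with `panic!`, which formats the message only on the error path. The sketch below shows that alternative, not what the commit does. `claim_reward` and its `Result<String, String>` input are hypothetical stand-ins for the `api::client::post` call.

```rust
/// Hypothetical stand-in for unwrapping the wallet receiver's response.
/// The panic message (including the server ID) is only built on error.
fn claim_reward(response: Result<String, String>, debug_output_id: &str) -> String {
    response.unwrap_or_else(|e| {
        panic!(
            "(Server ID: {}) Wallet receiver unreachable, could not claim reward. Is it running? ({})",
            debug_output_id, e
        )
    })
}

fn main() {
    let data = claim_reward(Ok("cb-data".to_string()), "example");
    println!("{}", data); // cb-data
}
```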


@@ -153,11 +153,12 @@ impl Server {
 /// Start mining for blocks on a separate thread. Relies on a toy miner,
 /// mostly for testing.
 pub fn start_miner(&self, config: MinerConfig) {
-let miner = miner::Miner::new(config,
+let mut miner = miner::Miner::new(config,
 self.chain_head.clone(),
 self.chain_store.clone(),
 self.chain_adapter.clone(),
 self.tx_pool.clone());
+miner.set_debug_output_id(format!("Port {}",self.config.p2p_config.port));
 thread::spawn(move || {
 miner.run_loop();
 });
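`start_miner` now tags each miner with an identifier derived from its P2P port. The miner prepends that identifier to its log lines as `(Server ID: ...)`, so output from several in-process servers can be told apart. The setter's body is not part of this diff. The sketch assumes a hypothetical `Miner` holding only `debug_output_id: String`, with a hypothetical `"none"` default. It also adds a hypothetical `tag` helper that produces the same prefix as the `debug!`/`info!` calls above.

```rust
/// Hypothetical minimal miner: only the debug identifier is modelled.
struct Miner {
    debug_output_id: String,
}

impl Miner {
    fn new() -> Miner {
        // Hypothetical default; the real initial value is not shown in the diff.
        Miner { debug_output_id: String::from("none") }
    }

    /// Presumably takes `&mut self`, which fits `start_miner` now binding `let mut miner`.
    fn set_debug_output_id(&mut self, debug_output_id: String) {
        self.debug_output_id = debug_output_id;
    }

    /// Prefix a log message the same way the miner's log calls do.
    fn tag(&self, msg: &str) -> String {
        format!("(Server ID: {}) {}", self.debug_output_id, msg)
    }
}

fn main() {
    let port = 13414; // example value only
    let mut miner = Miner::new();
    miner.set_debug_output_id(format!("Port {}", port));
    println!("{}", miner.tag("Found valid proof of work"));
    // prints "(Server ID: Port 13414) Found valid proof of work"
}
```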


@@ -137,7 +137,7 @@ impl Default for MinerConfig {
 wallet_receiver_url: "http://localhost:13416".to_string(),
 burn_reward: false,
 slow_down_in_millis: 0,
-cuckoo_size: 0,
+cuckoo_size: 0
 }
 }
 }


@@ -53,7 +53,7 @@ use core::consensus;
 /// Just removes all results from previous runs
 
 pub fn clean_all_output(test_name_dir:&str){
-let target_dir = format!("target/{}", test_name_dir);
+let target_dir = format!("target/test_servers/{}", test_name_dir);
 fs::remove_dir_all(target_dir);
 }
 
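`clean_all_output` now removes `target/test_servers/<test_name_dir>` rather than `target/<test_name_dir>`. It still discards the `io::Result` returned by `fs::remove_dir_all`, which the compiler warns about as an unused `Result`. That call returns an error when the directory does not exist yet. The sketch below builds the same path with `PathBuf` and ignores only the "not found" case, on the assumption that other errors should still surface. `test_output_dir` is a hypothetical helper.

```rust
use std::fs;
use std::io;
use std::path::PathBuf;

/// Hypothetical helper: directory holding one test's server output.
fn test_output_dir(test_name_dir: &str) -> PathBuf {
    PathBuf::from("target").join("test_servers").join(test_name_dir)
}

/// Remove previous results, treating an already-missing directory as success.
fn clean_all_output(test_name_dir: &str) -> io::Result<()> {
    match fs::remove_dir_all(test_output_dir(test_name_dir)) {
        Err(e) if e.kind() == io::ErrorKind::NotFound => Ok(()),
        other => other,
    }
}

fn main() {
    clean_all_output("simulate_parallel_mining").expect("could not clean test output");
}
```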

@@ -414,11 +414,10 @@ impl LocalServerContainerPool {
 
 
 //Use self as coinbase wallet
-if server_config.coinbase_wallet_address.len()==0 {
-server_config.coinbase_wallet_address=String::from(format!("http://{}:{}",
+server_config.coinbase_wallet_address=String::from(format!("http://{}:{}",
 server_config.base_addr,
 server_config.wallet_port));
-}
+
 
 self.next_p2p_port+=1;
 self.next_api_port+=1;
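This hunk drops the `if ...len()==0` guard. The coinbase wallet address is therefore always overwritten with the server's own wallet endpoint, `http://{base_addr}:{wallet_port}`, even if an address was already configured. The `String::from` wrapper is redundant, since `format!` already returns a `String`. Below is a sketch of the construction; the function name, parameter types and example values are assumptions.

```rust
/// Hypothetical helper mirroring how the pool points each server's coinbase
/// at its own wallet receiver. The `u16` port type is an assumption.
fn coinbase_wallet_address(base_addr: &str, wallet_port: u16) -> String {
    // `format!` already yields a `String`; no `String::from` wrapper needed.
    format!("http://{}:{}", base_addr, wallet_port)
}

fn main() {
    let addr = coinbase_wallet_address("127.0.0.1", 30010);
    println!("{}", addr); // http://127.0.0.1:30010
}
```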


@@ -130,12 +130,12 @@ fn simulate_parallel_mining(){
 env_logger::init();
 
 let test_name_dir="simulate_parallel_mining";
-framework::clean_all_output(test_name_dir);
+//framework::clean_all_output(test_name_dir);
 
 //Create a server pool
 let mut pool_config = LocalServerContainerPoolConfig::default();
 pool_config.base_name = String::from(test_name_dir);
-pool_config.run_length_in_seconds = 30;
+pool_config.run_length_in_seconds = 60;
 
 //have to select different ports because of tests being run in parallel
 pool_config.base_api_port=30040;

@@ -161,7 +161,7 @@ fn simulate_parallel_mining(){
 server_config.p2p_server_port));
 
 //And create 4 more, then let them run for a while
-for i in 0..4 {
+for i in 1..4 {
 //fudge in some slowdown
 server_config.miner_slowdown_in_millis = i*2;
 pool.create_server(&mut server_config);
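Changing the loop from `0..4` to `1..4` also changes how many servers it creates. Rust ranges exclude their upper bound, so `1..4` runs three times, with slowdowns of 2, 4 and 6 ms, while the comment still says "create 4 more". By contrast, `0..4` ran four times, and its first server got a slowdown of 0. Presumably that value feeds `slow_down_in_millis`, where 0 disables the slowdown. Below is a quick check of both ranges; the `slowdowns` helper is hypothetical.

```rust
/// Hypothetical helper: the slowdown assigned to each server the loop creates.
fn slowdowns(range: std::ops::Range<u64>) -> Vec<u64> {
    range.map(|i| i * 2).collect()
}

fn main() {
    println!("{:?}", slowdowns(0..4)); // [0, 2, 4, 6]
    println!("{:?}", slowdowns(1..4)); // [2, 4, 6]
}
```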
